University of California, Berkeley DS 100: sp18/hw2_solution.ipynb at master · DS-100/sp18 · GitHub

Document Content and Description Below

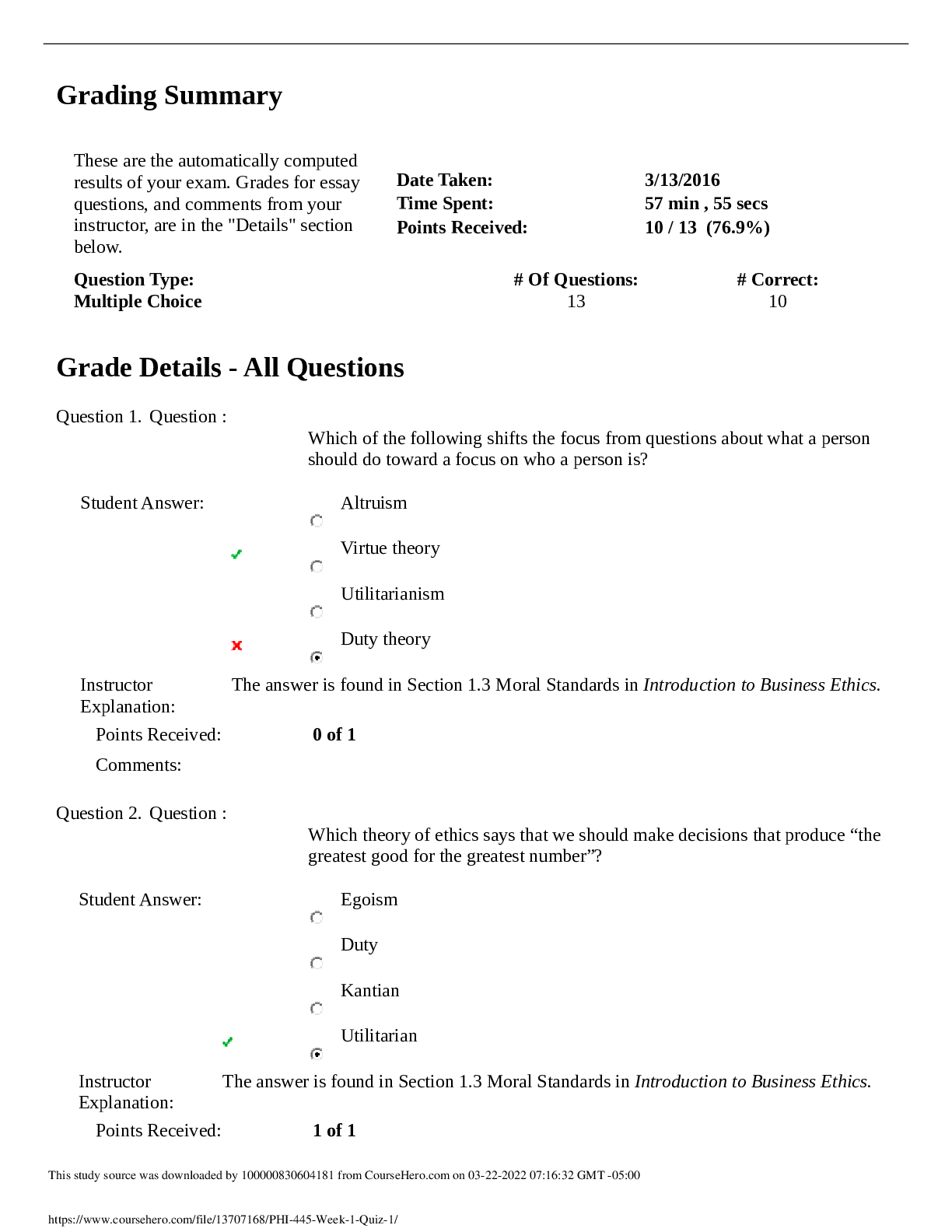

4/18/2018 · sp18/hw2_solution.ipynb at master · DS-100/sp18 · GitHub · https://github.com/DS-100/sp18/blob/master/hw/hw2/solution/hw2_solution.ipynb

Homework 2: Food Safety

Course Policies

Here are some important course policies. These are also located at http://www.ds100.org/sp18/.

Collaboration Policy

Data science is a collaborative activity. While you may talk with others about the homework, we ask that you write your solutions individually. If you do discuss the assignments with others, please include their names at the top of your solution.

Due Date

This assignment is due at 11:59pm Tuesday, February 6th. Instructions for submission are on the website.

Cleaning and Exploring Data with Pandas

[Image: sample restaurant score card (scoreCard.jpg)]

In this homework, you will investigate food safety scores for restaurants in San Francisco. Above is a sample score card for a restaurant. The scores and violation information have been made available by the San Francisco Department of Public Health, and we have made these data available to you via the DS 100 repository. The main goal for this assignment is to understand how restaurants are scored. We will walk through the various steps of exploratory data analysis to do this. To give you a sense of how we think about each discovery we make and what next steps it leads to, we will provide comments and insights along the way.

As we clean and explore these data, you will gain practice with:

- Reading simple CSV files
- Working with data at different levels of granularity
- Identifying the type of data collected, missing values, anomalies, etc.
- Exploring characteristics and distributions of individual variables

Question 0

To start the assignment, run the cell below to set up some imports and the automatic tests that we will need for this assignment.

In many of these assignments (and your future adventures as a data scientist) you will use os, zipfile, pandas, numpy, matplotlib.pyplot, and seaborn.

1. Import each of these libraries using its commonly used abbreviation (e.g., pd, np, plt, and sns).
2. Don't forget to use the Jupyter notebook "magic" to enable inline matplotlib plots (http://ipython.readthedocs.io/en/stable/interactive/magics.html#magic-matplotlib).
3. Add the line sns.set() to make your plots look nicer.

In [1]:

    import os
    import zipfile
    import pandas as pd
    import numpy as np
    import matplotlib.pyplot as plt
    import seaborn as sns
    %matplotlib inline
    sns.set()

In [2]:

    import sys

    assert 'zipfile' in sys.modules
    assert 'pandas' in sys.modules and pd
    assert 'numpy' in sys.modules and np
    assert 'matplotlib' in sys.modules and plt
    assert 'seaborn' in sys.modules and sns

Downloading the data

As you saw in lectures, we can download data from the internet with Python. Using the utils.py file from the lectures (see http://www.ds100.org/sp18/assets/lectures/lec05/utils.py), define a helper function fetch_and_cache to download the data with the following arguments:

- data_url: the web address to download
- file: the file in which to save the results
- data_dir: (default="data") the location to save the data
- force: if true, the file is always re-downloaded

This function should return a pathlib.Path object representing the file.

In [3]:

    import time
    from pathlib import Path

    import requests

    def fetch_and_cache(data_url, file, data_dir="data", force=False):
        """
        Download and cache a url and return the file object.

        data_url: the web address to download
        file: the file in which to save the results.
        data_dir: (default="data") the location to save the data
        force: if true the file is always re-downloaded

        return: The pathlib.Path object representing the file.
        """
        ### BEGIN SOLUTION
        data_dir = Path(data_dir)
        data_dir.mkdir(exist_ok=True)
        file_path = data_dir / Path(file)
        # If the file already exists and we want to force a download then
        # delete the file first so that the creation date is correct.
        if force and file_path.exists():
            file_path.unlink()
        if force or not file_path.exists():
            print('Downloading...', end=' ')
            resp = requests.get(data_url)
            resp.raise_for_status()  # don't cache an HTTP error page as data
            with file_path.open('wb') as f:
                f.write(resp.content)
            print('Done!')
        else:
            # gmtime (not ctime, which uses local time) keeps the "(UTC)" label accurate.
            last_modified_time = time.asctime(time.gmtime(file_path.stat().st_mtime))
            print("Using cached version last modified (UTC):", last_modified_time)
        return file_path
        ### END SOLUTION

Now use the previously defined function to download the data from the following URL: http://www.ds100.org/sp18/assets/datasets/hw2-SFBusinesses.zip

In [4]:

    data_url = 'http://www.ds100.org/sp18/assets/datasets/hw2-SFBusinesses.zip'
    file_name = 'data.zip'
    data_dir = '.'

    dest_path = fetch_and_cache(data_url=data_url, data_dir=data_dir, file=file_name)
    print('Saved at {}'.format(dest_path))

Loading Food Safety Data

To begin our investigation, we need to understand the structure of the data. Recall that this involves answering questions such as:

- Is the data in a standard format or encoding?
- Is the data organized in records?
- What are the fields in each record?

There are 4 files in the downloaded archive. Let's use Python to understand how this data is laid out.

Use the zipfile library to list all the files stored in the zip archive at dest_path. Creating a ZipFile object might be a good start (the Python docs at https://docs.python.org/3/library/zipfile.html have further details).

In [5]:

    # Fill in the list_names variable with a list of all the names of the files in the zip file
    my_zip = ...
    list_names = ...
    ### BEGIN SOLUTION
    my_zip = zipfile.ZipFile(dest_path, 'r')
    list_names = [f.filename for f in my_zip.filelist]
    print(list_names)
    ### END SOLUTION

Output of In [4] and In [5]:

    Using cached version last modified (UTC): Wed Feb 7 17:46:26 2018
    Saved at data.zip
    ['violations.csv', 'businesses.csv', 'inspections.csv', 'legend.csv']

In [6]:

    assert isinstance(my_zip, zipfile.ZipFile)
    assert isinstance(list_names, list)
    assert all([isinstance(file, str) for file in list_names])
    ### BEGIN HIDDEN TESTS
    assert set(list_names) == set(['violations.csv', 'businesses.csv', 'inspections.csv', 'legend.csv'])
    ### END HIDDEN TESTS

Now display the files' names and their sizes. You might want to check the attributes of a ZipFile object.

In [7]:

    ### BEGIN SOLUTION
    zf = zipfile.ZipFile(dest_path, 'r')
    for file in zf.filelist:
        print('{}\t{}'.format(file.filename, file.file_size))
    ### END SOLUTION

Question 1a

From the above output we see that one of the files is relatively small. Again using Prof. Perez's HTML notebook (http://www.ds100.org/sp18/assets/lectures/lec03/03-live-datatables-indexes-pandas.html) as a guide, display the first 5 lines of this file.

In [8]:

    file_to_open = ...
    ### BEGIN SOLUTION
    file_to_open = 'legend.csv'
    with zf.open(file_to_open) as f:
        for _ in range(5):
            print(f.readline().rstrip().decode())
    ### END SOLUTION

In [9]:

    assert isinstance(file_to_open, str)
    ### BEGIN HIDDEN TESTS
    assert file_to_open == 'legend.csv'
    ### END HIDDEN TESTS
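The listing, size, and first-lines steps in In [5], In [7], and In [8] can be folded into two small reusable helpers. The sketch below is only an illustration, not the course's solution: list_members and head are hypothetical names, and the archive is a made-up in-memory stand-in for data.zip (its member names and contents are invented) so the example runs without downloading anything. It relies on the standard-library ZipFile.infolist() and ZipInfo.file_size, and wraps the member stream in io.TextIOWrapper so lines come back as str (assuming UTF-8) rather than the bytes that f.readline() returns.

```python
import io
import itertools
import zipfile


def list_members(zip_source):
    """Return (filename, uncompressed size in bytes) for every member of a zip archive."""
    with zipfile.ZipFile(zip_source, 'r') as zf:
        return [(info.filename, info.file_size) for info in zf.infolist()]


def head(zip_source, member, n=5, encoding='utf-8'):
    """Return the first n lines of one archive member as str, without line endings."""
    with zipfile.ZipFile(zip_source, 'r') as zf:
        # TextIOWrapper decodes the binary member stream (assumed UTF-8) lazily,
        # so islice stops after n lines instead of reading the whole member.
        with io.TextIOWrapper(zf.open(member), encoding=encoding) as text:
            return [line.rstrip('\n') for line in itertools.islice(text, n)]


# Made-up stand-in for data.zip, built in memory so the sketch runs offline.
SMALL = 'a,b\n1,2\n3,4\n'
BIG = 'x\n' + ''.join(f'{i}\n' for i in range(100))

buffer = io.BytesIO()
with zipfile.ZipFile(buffer, 'w', compression=zipfile.ZIP_DEFLATED) as out:
    out.writestr('small.csv', SMALL)
    out.writestr('big.csv', BIG)

# Same shape of output as In [7]: name, tab, size.
for name, size in list_members(buffer):
    print(f'{name}\t{size}')

print(head(buffer, 'small.csv'))
```

infolist() returns ZipInfo objects in archive order; file_size is the uncompressed size, while ZipInfo.compress_size reports the stored size, and the two differ when members are compressed.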
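The cache-or-download branch of fetch_and_cache (In [3]) is easiest to check without touching the network. The sketch below is a hypothetical variant, fetch_and_cache_with, that takes the download step as a callable; fake_download is likewise made up for illustration. It keeps the original's rule that a forced refresh deletes the cached file first, and it drops the progress messages.

```python
import tempfile
from pathlib import Path


def fetch_and_cache_with(download, file, data_dir='data', force=False):
    """Cache-or-download logic of fetch_and_cache, with the network call injected.

    download: hypothetical zero-argument callable returning the file's bytes;
    in the notebook this role is played by requests.get(data_url).content.
    """
    data_dir = Path(data_dir)
    data_dir.mkdir(parents=True, exist_ok=True)
    file_path = data_dir / file
    # As in the original: delete a cached copy before a forced re-download.
    if force and file_path.exists():
        file_path.unlink()
    if not file_path.exists():
        file_path.write_bytes(download())
    return file_path


calls = []


def fake_download():
    """Hypothetical downloader: records each call and returns numbered bytes."""
    calls.append(1)
    return b'payload %d' % len(calls)


with tempfile.TemporaryDirectory() as tmp:
    first = fetch_and_cache_with(fake_download, 'data.zip', data_dir=tmp)
    second = fetch_and_cache_with(fake_download, 'data.zip', data_dir=tmp)  # cache hit
    cached_bytes = second.read_bytes()
    forced = fetch_and_cache_with(fake_download, 'data.zip', data_dir=tmp, force=True)
    final_bytes = forced.read_bytes()

print(len(calls), cached_bytes, final_bytes)  # prints: 2 b'payload 1' b'payload 2'
```

In the notebook's setting this would be called as fetch_and_cache_with(lambda: requests.get(data_url).content, 'data.zip', data_dir='.'). Passing the downloader in is only a design choice for offline testing, not something the assignment asks for.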

Document information: uploaded Jul 04, 2021 · 19 pages
